Market Research Report: Deepfake AI

- nipunkapur5678

- Oct 26, 2024


Behind the Tech:

Creating a deepfake requires a large amount of data: photographs or videos of the person from various perspectives and under various lighting conditions.

Generative Adversarial Networks (GANs) are made up of two neural networks, a generator and a discriminator, trained simultaneously. The generator produces photos or videos, while the discriminator judges whether they are authentic. An autoencoder network, which is trained to compress and then rebuild a picture, is also commonly used in the development of deepfakes.

Faces in the source and target photos are detected and aligned to ensure uniform orientation and position. The source face is encoded into a latent space using the autoencoder's encoder component. This latent representation is fed into a decoder trained on the target face, which transforms the source face to match the target's facial features and expressions.

The generated face is then blended with the original video frame. To ensure seamless integration, colour correction, lighting adjustments, and, on occasion, manual editing are required. If the deepfake is a video, the audio (if present) must be synchronised with the mouth movements of the generated face.
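The blending and colour-correction step described above can be illustrated with a minimal sketch. It assumes pixels are stored as flat lists of (r, g, b) tuples and uses a simple mean-colour shift followed by mask-weighted mixing. The function names `channel_means`, `colour_correct`, and `alpha_blend` are hypothetical and chosen for illustration; real pipelines typically use more sophisticated colour transfer and seamless-cloning methods.

```python
# Illustrative sketch only: pixels are (r, g, b) tuples in flat lists,
# and all function names here are hypothetical.

def channel_means(pixels):
    """Return the per-channel mean of a list of (r, g, b) pixels."""
    count = len(pixels)
    return tuple(sum(p[c] for p in pixels) / count for c in range(3))


def colour_correct(face, frame_region):
    """Shift the generated face so its mean colour matches the frame region."""
    face_mean = channel_means(face)
    frame_mean = channel_means(frame_region)
    shift = tuple(fr - fa for fa, fr in zip(face_mean, frame_mean))
    # Clamp each shifted channel to the valid 0..255 range.
    return [
        tuple(min(255.0, max(0.0, p[c] + shift[c])) for c in range(3))
        for p in face
    ]


def alpha_blend(face, frame_region, mask):
    """Mix face and frame per pixel; mask 1.0 keeps the face, 0.0 keeps the frame."""
    return [
        tuple(m * f[c] + (1.0 - m) * b[c] for c in range(3))
        for f, b, m in zip(face, frame_region, mask)
    ]


if __name__ == "__main__":
    generated_face = [(100, 90, 80), (120, 110, 100)]
    original_region = [(200, 190, 180), (220, 210, 200)]
    soft_mask = [1.0, 0.5]  # softer values near the face boundary hide the seam
    corrected = colour_correct(generated_face, original_region)
    print(alpha_blend(corrected, original_region, soft_mask))
```

A soft mask that fades towards the edge of the face is what hides the seam between the generated face and the original frame; a hard 0/1 mask would leave a visible outline.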

Barriers to Entry:

1) Deepfake technology demands a thorough understanding of machine learning, large language models and computing, as well as skills in computer vision and image processing. These skills are hard for individuals to learn or replicate.

2) Training the necessary deep learning models requires powerful GPUs or TPUs, which can be prohibitively expensive. Managing and storing huge training datasets adds to the cost and complexity.

3) Creating high-quality deepfakes requires large databases of photos or videos of the target individuals. Obtaining such databases can be challenging.

4) The hefty initial expenses for R&D, computing infrastructure, and data collection make it difficult to secure funding, especially considering the controversial nature of the technology.

Global Markets:

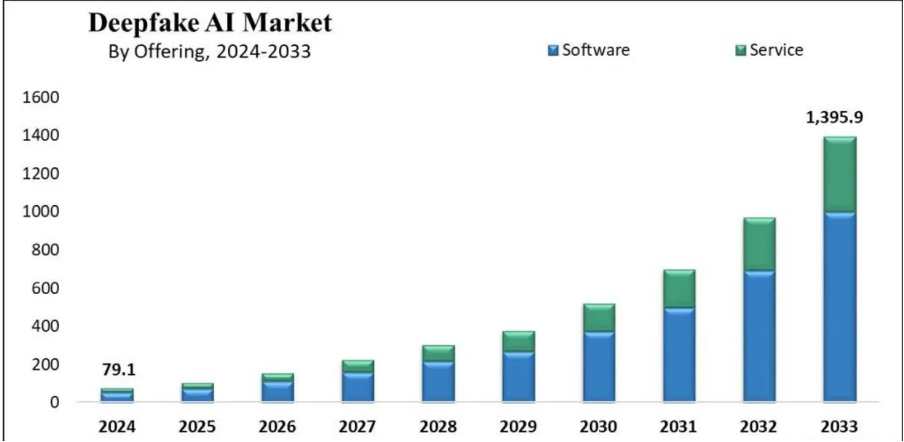

The global deepfake AI market is poised for rapid growth, with a projected value of USD 79.1 million by the end of 2024, forecast to reach USD 1,395.9 million by 2033, reflecting a CAGR of 37.6%. This surge is driven by the increasing sophistication and accessibility of AI technology, coupled with the expansion of digital communication platforms that provide fertile ground for the dissemination of deepfake content.
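As a quick sanity check on these figures, the compound annual growth rate can be recomputed from the start and end values. The sketch below assumes nine compounding years between the end of 2024 and 2033; the function names `cagr` and `project` are hypothetical helpers, not part of any cited report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Figures quoted in the text, in USD millions; 9 years is an assumption.
    rate = cagr(79.1, 1395.9, 9)
    print(f"Implied CAGR: {rate:.1%}")
    print(f"Projected 2033 value at 37.6%: {project(79.1, 0.376, 9):.1f}")
```

Under that nine-year assumption the implied rate comes out at roughly 37.6%, which is consistent with the quoted CAGR.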

From 2019 to 2023, the deployment of deepfake AI solutions increased dramatically across verticals such as education, customer service, and marketing, supporting market growth. Deepfake AI technologies are increasingly being used in education in several countries, including the United States, India, and China.

Key global companies include Intel, DuckDuckGo’s AI, Gradient AI, Primeau Forensics, Cogito Tech, Apple Inc., Microsoft, Google, Deepware, Deepface Lab, Reface AI, Buster AI, Kairos, Quantum Integrity, D-ID, etc.

Surging deepfake scams open new doors for deepfake detection companies: globally, deepfake technology has evolved, making it easier for scammers to create realistic audio and video content. These scammers impersonate trusted figures, fabricate evidence, and deceive victims with manipulated media, resulting in successful schemes. This industry alone has reached $15.5 billion, up from $5.5 billion last year, and is expected to grow at a CAGR of 38.3% from 2024 to 2029.

Key Players and Moat:

FakeBuster, a deepfake detector released by the Indian Institute of Technology (IIT) Ropar in 2021, detects whether videos of participants in a video call are real or manipulated. FakeBuster uses screen recordings of video conferences to train deep-learning models to identify fake videos and participants.

Face-NeSt dynamically chooses between a range of features based on their relevance to the context to identify face manipulation.

Gujarat-based start-up Kroop AI has developed a detector called VizMantiz. It is positioned for BFSI (Banking, Financial Services, Insurance) and social media platforms.

Recent Developments:

In February 2024, Paravision launched Paravision DeepFake Detection, an advanced solution developed to combat identity fraud and misinformation.

In February 2024, Meta announced plans to identify and label AI-generated images to address concerns surrounding DeepFake proliferation.

In January 2024, McAfee Corp. introduced Project Mockingbird, an AI-powered DeepFake audio detection technology, to defend against phishing attacks.

In November 2023, Google partnered with the Indian government to address the spread of DeepFake videos and misinformation online.

In November 2022, Intel launched Real-Time DeepFake Detector, leveraging FakeCatcher technology to analyze video pixels with 96% accuracy.

